| Issue 4 |

I research robotics. What do you imagine that means? Do I spend time attending to a babbling, annoying, shiny-gold humanoid? No, that is the stuff of science fiction. Perhaps I'm concerned with a "River Dance" troupe of robot arms as they perform their synchronised assembly of a car? No, this is a well-understood area - witnessed by industrial adoption of the technology. In fact I'm concerned with a problem for which the robot mechanism (body) is largely irrelevant. The problem on which I work is equally central to the future of robots operating on Mars, on the ocean floor, in nuclear facilities, in your living room, down mines and inside B&Q warehouses. The problem is this: how does a mobile robot know where it is?

Having a machine answer "where am I?" is an information engineering problem that has been at the heart of mobile robotics research for over two decades, and while fine progress has been made, we still do not possess the machines we thought were just "around the corner" in the early 1980s. We still hope to build autonomous mobile robots that operate in both "everyday" and exotic locations. We need robots to explore places that we as humans can't reach or are unwilling to work in (for economic as well as safety reasons). Not getting lost requires answering "where am I?" at all times. But how can robots do this without a map? Can they make one for themselves? Yes, but as yet, not reliably.

| Figure 1: "Marge" - an all terrain autonomous vehicle with 2D and 3D laser scanners. Her partner, "Homer", is not shown. The cube near the front bumper is the 2D laser scanner used to build the basement map in Figure 2. The second cube is mounted on a "nodder" and allows the vehicle to scan its environment in 3D. |

But let's back up: why would they need to make a map in the first place? Why not use GPS or "tell" the machines in advance what the operating region looks like - i.e. give them the map? Well, for one thing GPS doesn't work sub-sea, underground, in buildings or on Mars, and it is pretty flaky in built-up areas, so we can discount that. It is true that in some situations we could (and do) provide the vehicle with an a priori map, often in the form of laser-reflective strips or beacons stuck at known locations around a factory, port or hospital. The problem is that it is not always possible, let alone convenient or cheap, to install this infrastructure, and once installed it is inflexible and imposes unnatural constraints on the workspace. You don't want a pallet delivery system to fail simply because someone obscured a few patches on a wall. Imagine the increase in autonomy that would result from being able to place a machine in an a priori unknown environment and have it learn a map of its environment as it moves around. It could then use this map to answer the "where am I?" question - to localise. This is called the Simultaneous Localisation and Mapping (SLAM) problem and is stated as follows:

"How can a mobile robot operate in an a priori unknown environment and use only onboard sensors to simultaneously build a map of its workspace and use it to navigate?"

The tricky part is that this is a chicken-and-egg problem: to build a map you need to know where you (the observer) are, but at the same time you need a map to figure out where you are. The simplicity of the SLAM problem statement is beguiling. It is after all something that we humans, with varying degrees of success, do naturally - for example when stepping out of a hotel foyer in a new city. Yet SLAM in particular is a topic that has challenged the robotics research community for over a decade.

There is a strong commercial incentive to use SLAM in sectors already well populated with mobile robotics. The number of service robots in commercial use is substantial and growing fast. They appear in a multitude of guises around the world - hospital courier systems, warehouse and port management, manufacturing and security to name but a few. The United Nations Economic Commission for Europe (UNECE) predicts expenditure on personal and service robotics will grow from $660m US in 2002 to over $5b US in 2005 [1] (excluding military expenditure which is a vast sector in itself). However, almost without exception, and as I've already mentioned, present day service robots require costly and inconvenient installation procedures and are intolerant of changes in the workspace. SLAM offers a viable way to entirely circumvent these issues and further fuel the commercial exploitation and development of mobile robotics.

From an information engineering perspective the difficulties arise from two sources - uncertainty management and perception. Sensors are noisy and physical motion models are incomplete. The combination of uncertain motion and uncertain sensing leads inevitably to uncertain maps. A key challenge is how to manage this pervasive uncertainty in a principled fashion and, most importantly, in real-time. There is then the issue of suitable perception for SLAM - what aspects of the robot's workspace should be sensed and used to build the map with which to localise? How should they be sensed and what is the interplay between sensing and map representation? These are some of the questions that the Mobile Robotics Research Group is trying to answer.
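To make the interplay of uncertain motion and uncertain sensing concrete, here is a minimal sketch in one dimension. It uses a textbook Kalman filter, which is an assumption made for illustration and not necessarily the method used by the group; the function names and all noise values are hypothetical.

```python
def predict(mean, var, motion, motion_var):
    """Move by `motion`; an imperfect motion model adds variance."""
    return mean + motion, var + motion_var


def update(mean, var, measurement, meas_var):
    """Fuse one noisy position measurement (standard 1D Kalman update)."""
    gain = var / (var + meas_var)
    return mean + gain * (measurement - mean), (1.0 - gain) * var


# Hypothetical numbers: drive ten 1 m steps by dead reckoning alone.
mean, var = 0.0, 0.0
for _ in range(10):
    mean, var = predict(mean, var, motion=1.0, motion_var=0.04)
var_before = var  # uncertainty has grown with every step

# One noisy observation of position pulls the uncertainty back down.
mean, var = update(mean, var, measurement=10.3, meas_var=0.09)
print(f"variance before fix: {var_before:.3f}, after: {var:.3f}")
```

Each prediction step inflates the variance, and each measurement shrinks it below both the prior variance and the sensor variance. In SLAM the same bookkeeping has to cover the vehicle and every mapped feature jointly, which is where the real-time difficulty comes from.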

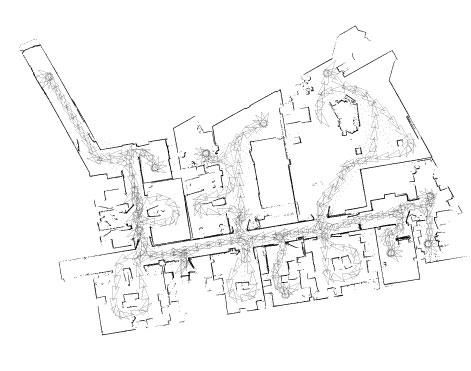

| Figure 2: A SLAM-built map of the Information Engineering Building at Oxford. The small triangles on the grey trace mark the path of the robot around the building. The map is built from scratch and develops as the vehicle moves. The same map is used for navigation. |

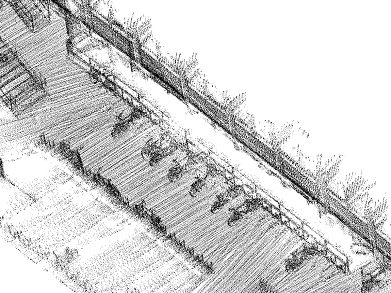

Figure 2 is a map of the Information Engineering Building basement at Oxford built using a SLAM algorithm. The vehicle started in the top right-hand corner and drove around the building, entering each room sequentially. (The path planning was done by a human in this case, although we do have autonomous path planners/explorers.) A key point is that the size of the map grows with time, as can be seen in the video at the web address given below [2]. The basement contained piles of builder's rubble and sundry non-regular equipment, all of which was mapped precisely. This map was built with a 2D laser scanner mounted on our robot "Marge", shown in Figure 1. We are now looking beyond 2D-only mapping to building full 3D maps using combinations of laser, camera and radar data. Figure 3 shows a rendering of a map built of part of the exterior of the Thom building. In our very latest work, "Marge" has mapped the whole Keble triangle.

| Figure 3: A section of a 3D SLAM map - the bicycle racks of the Thom building |

So what are the open questions? If it is now possible to build these maps on-the-fly and have machines use them to navigate, then what is left to do? Well, there is a problem with robustness. The machines may work for an hour or so but then things tend to go horribly wrong. A particular problem is correct "loop-closing" - recognising that the vehicle has returned to a previously visited (mapped) location.

Central to our approach is local scene saliency detection - finding what is interesting and "stands out" in a particular block of 3D laser data or what is remarkable about a given camera image. Intuitively, if we build a database consisting of "interesting" things and the times they were observed we could query the database with the current scene; if positive matches are returned then there is a good chance we have been here before. As always the devil is in the detail. It is unlikely that exactly the same place is revisited and so onboard sensors will present a different view of the same place the second time round. Now, we as humans are pretty clever at understanding multiple views of the same scene - it isn't so easy to endow a machine with the same ability.

In one approach we are combining sensed geometry, resulting from 2D and 3D laser scanners with texture and pattern information from cameras, to build a database of complex, high-dimensional scene descriptors. We use these rich descriptors to disambiguate the loop-closing problem. One can think of this as loop-closing by "recognising" a previously visited location because of how it looks, how it appears. This is in contrast to having the robot blindly believe an internal idea (estimate) of position and only entertaining the possibility of loop closure when this estimate coincides with a previously mapped area.
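The database idea described above can be sketched as follows. This is only an illustration under stated assumptions: the three-element descriptors, the cosine-similarity measure, the thresholds and the `SceneDatabase` class are all hypothetical stand-ins, whereas the real descriptors are high-dimensional combinations of laser geometry and camera texture.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two descriptor vectors, in [-1, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SceneDatabase:
    """Stores (timestamp, descriptor) pairs for 'interesting' scenes."""

    def __init__(self):
        self.entries = []

    def add(self, timestamp, descriptor):
        self.entries.append((timestamp, list(descriptor)))

    def query(self, timestamp, descriptor, min_similarity=0.95, min_time_gap=60.0):
        """Return (timestamp, similarity) of old scenes that look like this one.

        Scenes seen less than `min_time_gap` seconds ago are skipped, since a
        match with the very recent past says nothing about revisiting a place.
        """
        candidates = []
        for seen_at, stored in self.entries:
            if timestamp - seen_at < min_time_gap:
                continue
            similarity = cosine_similarity(descriptor, stored)
            if similarity >= min_similarity:
                candidates.append((seen_at, similarity))
        return sorted(candidates, key=lambda c: c[1], reverse=True)


db = SceneDatabase()
db.add(0.0, [0.9, 0.1, 0.4])       # hypothetical: a poster seen at the start
db.add(5.0, [0.1, 0.8, 0.2])       # hypothetical: a plain stretch of wall
db.add(290.0, [0.88, 0.12, 0.41])  # hypothetical: seen only moments ago
candidates = db.query(300.0, [0.88, 0.12, 0.41])
print(candidates)
```

Note that the query uses appearance and observation time only, never the position estimate. Any candidate it returns is merely a suggestion that would still have to be checked by the SLAM algorithm itself.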

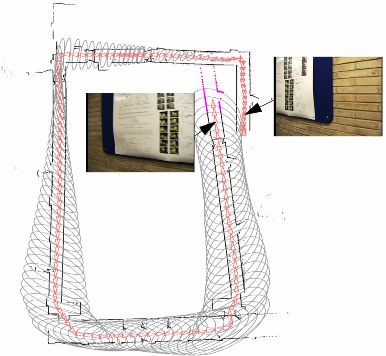

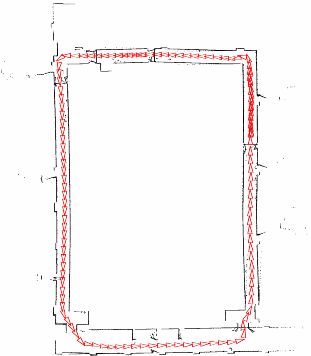

| Figures 4a and b: The effect of using visual saliency in autonomous mapping. Two views of the same poster are detected and used to suggest a "loop closing event" |

To illustrate, Figures 4a and b above show a map of a looping corridor about 100 m long. The left hand figure shows the map built using geometrical mapping alone - clearly it has missed the loop closure event. (The ellipses represent estimated three-sigma bounds on vehicle location.) The problem was caused by a small angular error while coming through some swing doors on the bottom right. The map on the right of the figure is produced when the algorithm is presented with sequential views from an onboard camera. Without prompting, it spots the similarity between two posters (interesting texture in the context of the rest of the wall). The fact that the images have vastly different time-stamps suggests the vehicle is revisiting an already mapped area. The validity of this tentative loop closure is checked and accepted by the SLAM algorithm, which then modifies the map and vehicle location to produce the crisp, correct map on the right. Importantly, and in contrast to the status quo in SLAM, the possibility of loop closure is deduced without reference to location or map estimates. Were this not so we would be using a potentially flawed map and position estimate to make decisions about data interpretation - hardly a robust approach!
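It may help to see why a small angular error matters so much over a corridor of this length. The sketch below is a back-of-envelope calculation, not taken from the experiment: the size of the error at the swing doors is not stated, so the 1 degree figure is purely an assumption, as is the simplification that the vehicle then drives 100 m in a straight line with otherwise perfect odometry.

```python
import math


def end_point_error(distance_m, heading_error_deg):
    """Gap between true and estimated end points after a straight drive.

    The true path ends at (d, 0); the estimated path, rotated by the heading
    error theta, ends at (d cos(theta), d sin(theta)). The distance between
    those two points is 2 d sin(theta / 2).
    """
    theta = math.radians(heading_error_deg)
    return 2.0 * distance_m * math.sin(theta / 2.0)


# Assumed values: a 1 degree heading error carried along 100 m.
error_m = end_point_error(100.0, 1.0)
print(f"end-point error: {error_m:.2f} m")
```

Under these assumptions the estimated and true positions differ by well over a metre by the end of the loop, which is enough for a purely geometric mapper to fail to line up the two ends of the corridor.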

Alas, there is not space to describe the other approaches we are taking to increase robustness in mobile robot navigation. Suffice it to say it is a fascinating area in which to be working and indeed one that has seen a resurgence of both research and industrial interest in the UK and internationally of late. High-profile events like the DARPA Grand Challenge (in which autonomous machines are required to navigate the Mojave desert), Martian rovers, deep-sea rescue and oil surveying are never far away from the science press. But if these machines are really to fulfil their potential for improving our lives, scientific knowledge and operational reach, they can't get lost. They have to operate for weeks and months, not hours and minutes. This is not simply a matter of better software, better hardware or better sensors. It comes down to smarter perception and smarter information engineering. That is what we are working on.

Copyright © 2005 Society of Oxford University Engineers
